Instagram’s algorithms are actively promoting networks of pedophiles who commission and sell child sexual abuse content on Meta’s popular image sharing app.

A joint investigation by The Wall Street Journal and academics at Stanford University and the University of Massachusetts Amherst has revealed the extent to which Instagram “connects pedophiles and guides them to content sellers” through its recommendation systems.

Accounts found by the researchers are advertised using blatant and explicit hashtags like #pedowhore, #preteensex, and #pedobait. They offer “menus” of content for users to buy or commission, including videos and imagery of self-harm and bestiality. When researchers set up a test account and viewed content shared by these networks, they were immediately recommended more accounts to follow. As the WSJ reports: “Following just a handful of these recommendations was enough to flood a test account with content that sexualizes children.”

In response to the report, Meta said it was setting up an internal task force to address the issues raised by the investigation. “Child exploitation is a horrific crime,” the company said. “We’re continuously investigating ways to actively defend against this behavior.”

Meta noted that in January alone it took down 490,000 accounts that violated its child safety policies and over the last two years has removed 27 pedophile networks. The company, which also owns Facebook and WhatsApp, said it’s also blocked thousands of hashtags associated with the sexualization of children and restricted these terms from user searches.

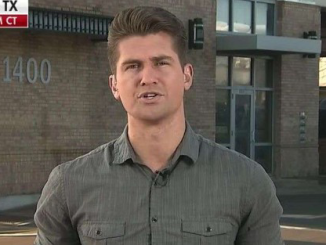

Alex Stamos, head of Stanford’s Internet Observatory and former chief security officer for Meta, told the WSJ that the company can and should be doing more to tackle this issue. “That a team of three academics with limited access could find such a huge network should set off alarms at Meta,” said Stamos. “I hope the company reinvests in human investigators.”

In addition to problems with Instagram’s recommendation algorithms, the investigation also found that the site’s moderation practices frequently ignored or rejected reports of child abuse material.

The WSJ recounts incidents where users reported posts and accounts containing suspect content (including one account that advertised underage abuse material with the caption “this teen is ready for you pervs”), only for the content to be cleared by Instagram’s review team or for the users to be told in an automated message: “Because of the high volume of reports we receive, our team hasn’t been able to review this post.” Meta acknowledged to the Journal that it had failed to act on these reports and said it was reviewing its internal processes.

The report also looked at other platforms but found them less amenable to growing such networks. According to the WSJ, the Stanford investigators found “128 accounts offering to sell child-sex-abuse material on Twitter, less than a third the number they found on Instagram” despite Twitter having far fewer users, and that such content “does not appear to proliferate” on TikTok. The report noted that Snapchat did not actively promote such networks as it’s mainly used for direct messaging.

David Thiel, chief technologist at the Stanford Internet Observatory, told the Journal that Instagram was simply not striking the right balance between recommendation systems designed to encourage and connect users, and safety features that examine and remove abusive content.

“You have to put guardrails in place for something that growth-intensive to still be nominally safe, and Instagram hasn’t,” said Thiel.

* Article From: The Verge